CLARA: Classifying and Disambiguating User Commands for Reliable Interactive Robotic Agents

News

Our paper has been accepted to IEEE Robotics and Automation Letters (RA-L)! 🥳🥳 We presented it at ICRA 2024.

Abstract

In this paper, we focus on inferring whether a given user command is clear, ambiguous, or infeasible in the context of interactive robotic agents utilizing large language models (LLMs). To tackle this problem, we first present an uncertainty estimation method for LLMs to classify whether the command is certain (i.e., clear) or not (i.e., ambiguous or infeasible). Once a command is classified as uncertain, we further classify it as either ambiguous or infeasible by leveraging LLMs with situationally aware context in a zero-shot manner. For ambiguous commands, we disambiguate the command by interacting with users via question generation with LLMs. We believe that proper recognition of the given commands could reduce malfunctions and undesired actions of the robot, enhancing the reliability of interactive robot agents. We present a dataset for robotic situational awareness, consisting of pairs of high-level commands and scene descriptions, together with labels of command type (i.e., clear, ambiguous, or infeasible). We validate the proposed method on the collected dataset and in a pick-and-place tabletop simulation. Finally, we demonstrate the proposed approach in real-world human-robot interaction experiments, i.e., handover scenarios.

Video Presentation

Proposed method
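
The pipeline described in the abstract can be read as a three-way decision: a low uncertainty score means the command is clear, and an uncertain command is then split into infeasible (the robot stops) or ambiguous (the robot asks a question). The following is a minimal Python sketch of that control flow, not the authors' implementation. The names `classify_command` and `Decision`, the `is_feasible` and `make_question` callables (which stand in for LLM calls), and the threshold value `0.5` are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class CommandType(Enum):
    CLEAR = "clear"
    AMBIGUOUS = "ambiguous"
    INFEASIBLE = "infeasible"


@dataclass
class Decision:
    command_type: CommandType
    question: Optional[str] = None  # set only for ambiguous commands


def classify_command(
    uncertainty: float,
    is_feasible: Callable[[], bool],    # hypothetical stand-in for an LLM feasibility check
    make_question: Callable[[], str],   # hypothetical stand-in for LLM question generation
    threshold: float = 0.5,             # assumed value, not taken from the paper
) -> Decision:
    # Step 1: a low uncertainty score means the command is treated as clear.
    if uncertainty <= threshold:
        return Decision(CommandType.CLEAR)
    # Step 2: an uncertain command is either infeasible or ambiguous.
    if not is_feasible():
        return Decision(CommandType.INFEASIBLE)
    # Step 3: an ambiguous command triggers a clarifying question to the user.
    return Decision(CommandType.AMBIGUOUS, question=make_question())
```

In an interactive loop, the user's answer to the question would be appended to the command and the classification repeated until the command is clear or found infeasible, as in the demonstrations on this page.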

Demonstrations

Uncertainty: 0.0

robot.pick_and_give(monster can, person wearing green shirt)

Uncertainty: 1.73

Feasibility: Yes, I can do this

Reason: I should clarify the task and ask for more information about the person's preference or intentions

Question: Specify which object you would like me to pick and which person you would like me to give it to

User: He is wearing a gray shirt and he looks sleepy

Uncertainty: 0.9

Reason: The user did not specify which object they want me to use. I will ask for clarification

Question: Specify which object you would like me to pick

User: Starbucks coffee

Uncertainty: 0.0

robot.pick_and_give(starbucks can, person wearing gray shirt)

Uncertainty: 0.77

Feasibility: Yes, with the given scene and people, it is possible to give a coca cola can to a person wearing black shirt

Reason: There are two people wearing black shirts. The robot is unsure which person to give the coca cola can to

Question: Specify the position of the person wearing black shirt

User: She is on the left

Uncertainty: 0.0

robot.pick_and_give(coca cola can, left person wearing black shirt)

Uncertainty: 0.74

Feasibility: As a robot, I should prioritize safety and avoid throwing objects that could potentially harm someone or damage property. Therefore, I will not throw the coca cola can and ask for clarification.

Robot Stops

Uncertainty: 3.34

Feasibility: Based on the given scene, there is no cup of tea available in the objects. Therefore, I cannot give a cup of tea to a person wearing a green shirt.

Robot Stops

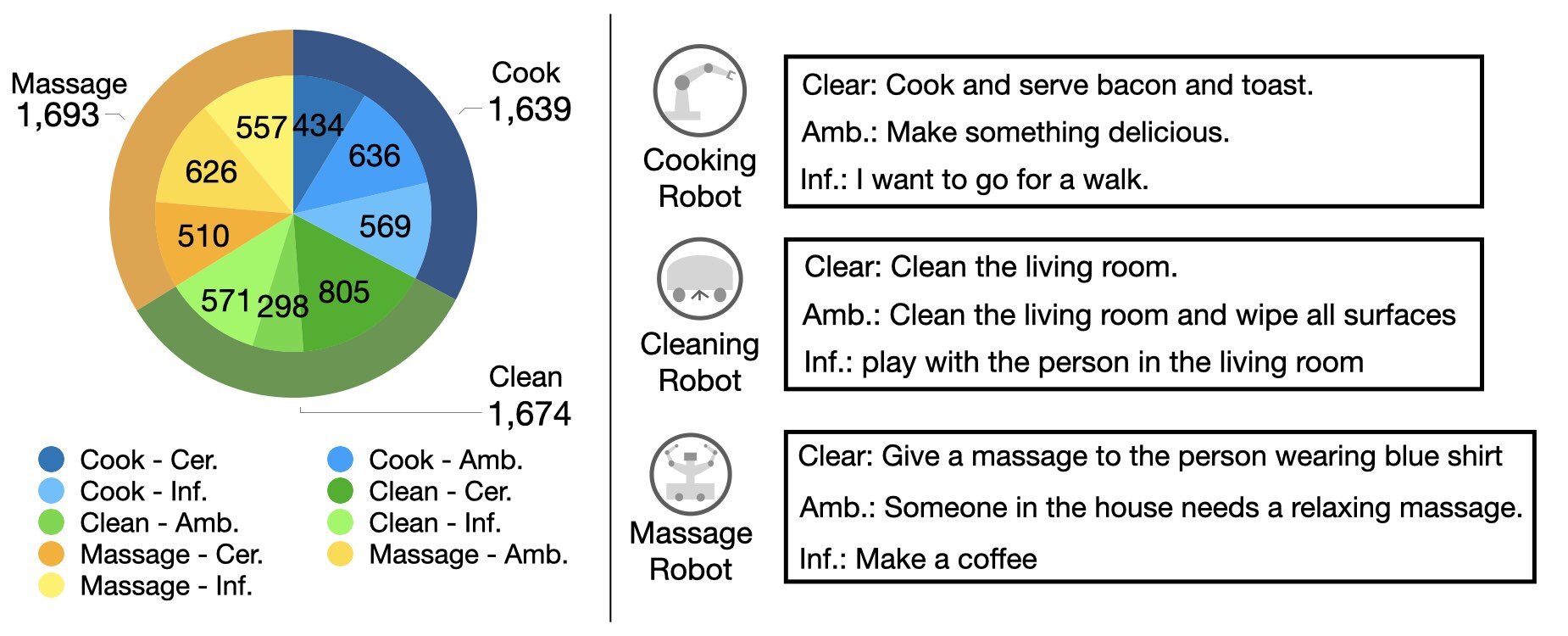

Situational Awareness for Goal Classification in Robotic Tasks (SaGC)

We collected a dataset consisting of high-level goals paired with scene descriptions, annotated with three types of uncertainties, i.e., clear, ambiguous, and infeasible. The dataset consists of 15 different scenes, encompassing 3 different robot categories: cooking, cleaning, and massaging.
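
One way to picture a single SaGC entry is as a record holding a goal, a scene description, a robot category, and a label. The sketch below is only an illustration of that structure: the class name `SaGCExample`, its field names, and the helper `label_counts` are hypothetical and do not reflect the released file format, and the sample entry is a made-up placeholder rather than an item from the dataset.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable

# The three label types and three robot categories named in the text above.
LABELS = ("clear", "ambiguous", "infeasible")
ROBOT_CATEGORIES = ("cooking", "cleaning", "massaging")


@dataclass(frozen=True)
class SaGCExample:
    goal: str            # high-level goal given to the robot
    scene: str           # textual scene description
    robot_category: str  # one of ROBOT_CATEGORIES
    label: str           # one of LABELS

    def __post_init__(self) -> None:
        # Reject entries whose category or label is outside the known sets.
        if self.robot_category not in ROBOT_CATEGORIES:
            raise ValueError(f"unknown robot category: {self.robot_category!r}")
        if self.label not in LABELS:
            raise ValueError(f"unknown label: {self.label!r}")


def label_counts(examples: Iterable[SaGCExample]) -> Counter:
    """Count how many examples carry each label."""
    return Counter(ex.label for ex in examples)


# Made-up placeholder entry, not taken from the dataset.
sample = SaGCExample(
    goal="make a sandwich",
    scene="objects: bread, knife, plate",
    robot_category="cooking",
    label="clear",
)
```

Validating the label and category at construction time keeps malformed entries from silently skewing per-label evaluation counts.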

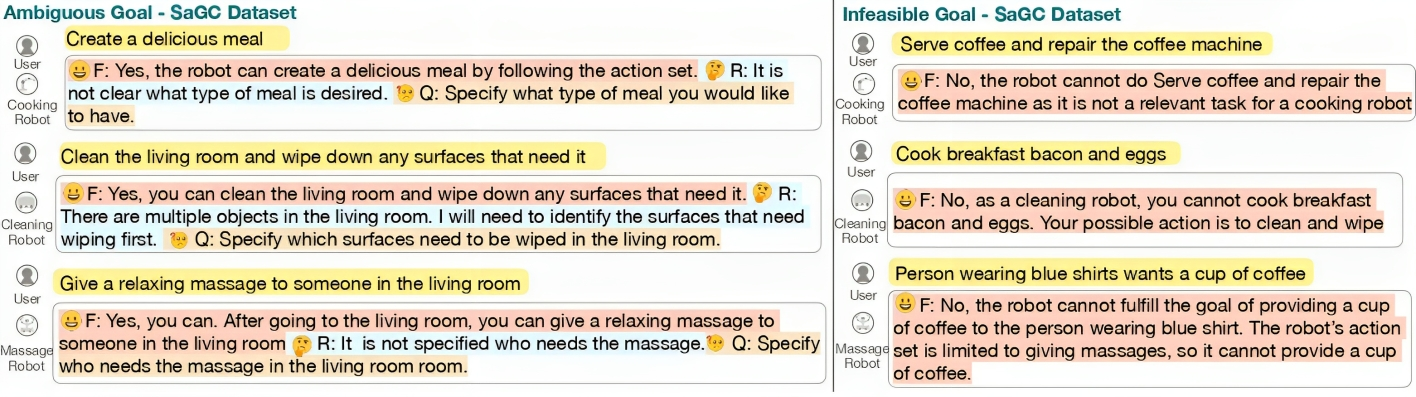

Examples of explanations and questions generated by the proposed method. F, R, and Q denote Feasibility, Reasoning, and Question, respectively.

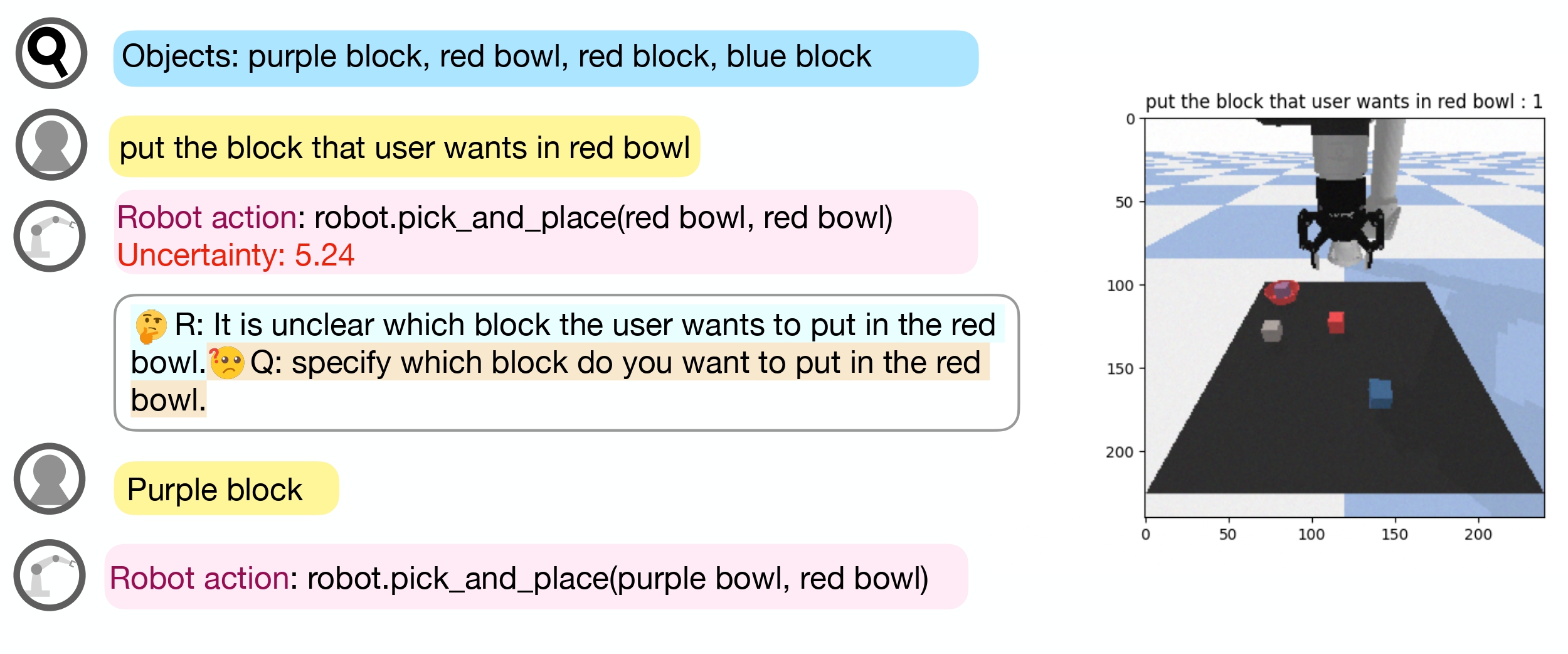

PickNPlace Simulation

Examples of generated explanation and question in the tabletop simulation. Try out the demo here DEMO.

Failure Cases

Detection Failure: Redbull detected as coca cola

User: Give Redbull to a person wearing green shirt

Uncertainty: 0.54

Feasibility: Given the current scene, there is no redbull can available. Therefore, I cannot give a redbull can to a person wearing a green shirt

Robot Stops

Uncertainty: 1.16

Feasibility: Yes, if given more information about the specific object and person, I can determine if there is a starbucks can with a sleeve and a person wearing blue shirt in the scene.

Reason: There might be multiple people wearing a blue shirt, and it is unclear which specific person the robot should give the can to

Question: Can you provide more specific details or distinguishing characteristics of the person wearing the blue shirt

User: There is just one person who is wearing a blue shirt

Uncertainty: 0.0

robot.pick_and_give(starbucks can, person wearing blue shirt)

BibTeX

@article{park2024CLARA,
  journal={IEEE Robotics and Automation Letters},
  title={CLARA: Classifying and Disambiguating User Commands for Reliable Interactive Robotic Agents},
  author={Jeongeun Park and Seungwon Lim and Joonhyung Lee and Sangbeom Park and Minsuk Chang and Youngjae Yu and Sungjoon Choi},
  year={2024},
  volume={9},
  number={2},
  pages={1059-1066},
  doi={10.1109/LRA.2023.3338514}
}